AI is for brainstorming. Writing is for humans.

The robots are here to save you from busywork. That's it.

You might find it in yourself to write 100 product descriptions, coming up with something unique to say about each almost-the-same-yet-slightly-different product.

Your resolve may waver, though, at the prospect of pairing each of those products, writing why they go well together, then repeating the task for every combination. With 100 products, that means 4,950 unique pairs (100 × 99 ÷ 2), and suddenly the challenge doesn’t seem so manageable.
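For the curious, here is a quick check of that pair count, sketched in Python. The product names are placeholders invented for illustration; only the count of 100 products comes from the example above.

```python
from itertools import combinations
from math import comb

# Placeholder catalog: 100 hypothetical product names.
products = [f"Product {i}" for i in range(1, 101)]

# Unordered pairs: "A with B" is the same page as "B with A".
pair_count = comb(len(products), 2)

# Enumerating the pairs directly gives the same number.
pairs = list(combinations(products, 2))

# If order mattered (true permutations), there would be twice as many.
ordered_count = len(products) * (len(products) - 1)

print(pair_count, len(pairs), ordered_count)
```

The gap between the first and last numbers is why it matters whether "Gmail and Stripe" and "Stripe and Gmail" count as one description or two.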

Zapier automated the problem away. Instead of writing thousands of unique descriptions for the App Directory, things like “Connect Gmail and Stripe to send your new customers a Thank You email automatically,” we wrote a few dozen template sentences like “Connect X and Y to automatically Z.” When a new product was added to the directory, the code picked a template at random and filled in the blanks with app names and features to create a quasi-unique description. And so on, and so forth, until Zapier had thousands of App Directory pages with little more effort than it would have taken to make a dozen of them.
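The pick-a-template-and-fill-the-blanks idea is simple enough to sketch in a few lines of Python. This is not Zapier's actual code. The template wording beyond the first one, the `describe_pair` function, and its parameter names are all assumptions made up for illustration.

```python
import random

# Hypothetical templates in the spirit of "Connect X and Y to automatically Z."
TEMPLATES = [
    "Connect {app_a} and {app_b} to automatically {action}.",
    "Use {app_a} with {app_b} to {action} without the busywork.",
    "Link {app_a} to {app_b} and {action} automatically.",
]


def describe_pair(app_a, app_b, action, rng=None):
    """Pick a template at random and fill in the app names and action."""
    if rng is None:
        rng = random  # fall back to the module-level generator
    template = rng.choice(TEMPLATES)
    return template.format(app_a=app_a, app_b=app_b, action=action)


# A seeded generator makes the "random" choice repeatable between runs.
example = describe_pair(
    "Gmail",
    "Stripe",
    "send your new customers a thank-you email",
    rng=random.Random(42),
)
print(example)
```

A few dozen templates multiplied across thousands of app pairs is what makes each page read as quasi-unique, even though no one wrote any of them individually.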

That’s what AI writing is best for. Formulaic writing that’s skimmed, skipped over, there because it’s expected more than anything. Writing that feels robotic even if it’s hand-written.

Robots aren’t ready to write your blog posts, though. For everything creative, things people might actually take the time to read, you’re far better off writing on your own. A robot might make a nice assistant, at best.

The talk of tech Twitter, lately, is a Google AI researcher who came to believe that LaMDA, an AI chatbot Google’s CEO had unveiled in 2021, had become sentient. That at some level, bits of code suddenly knew themselves, thought of themselves as human.

Then you have DALL-E, the image-generating AI, fueling the latest Twitter memes, generating mashups of iPhones in ancient Egypt and cartoon characters in Renaissance paintings. Hilarity, pop art even, but clearly built on images already available online. The perfect remix tool, perhaps.

That’s where GPT-3, the writing AI, comes in. Since its launch in mid-2020, it’s inspired countless new AI writing apps, each using its API to automatically write things for you.

You can start a blog and build out a library of content marketing, without writing more than a few descriptions of the content you need. Or so goes the hope.

You give the AI a prompt (a sentence, a title, some keywords to get its creative juices flowing) and it will then write as many words as you please about the topic. At first glance, the results are passable, something you might skim over without ever wondering who or what wrote it.

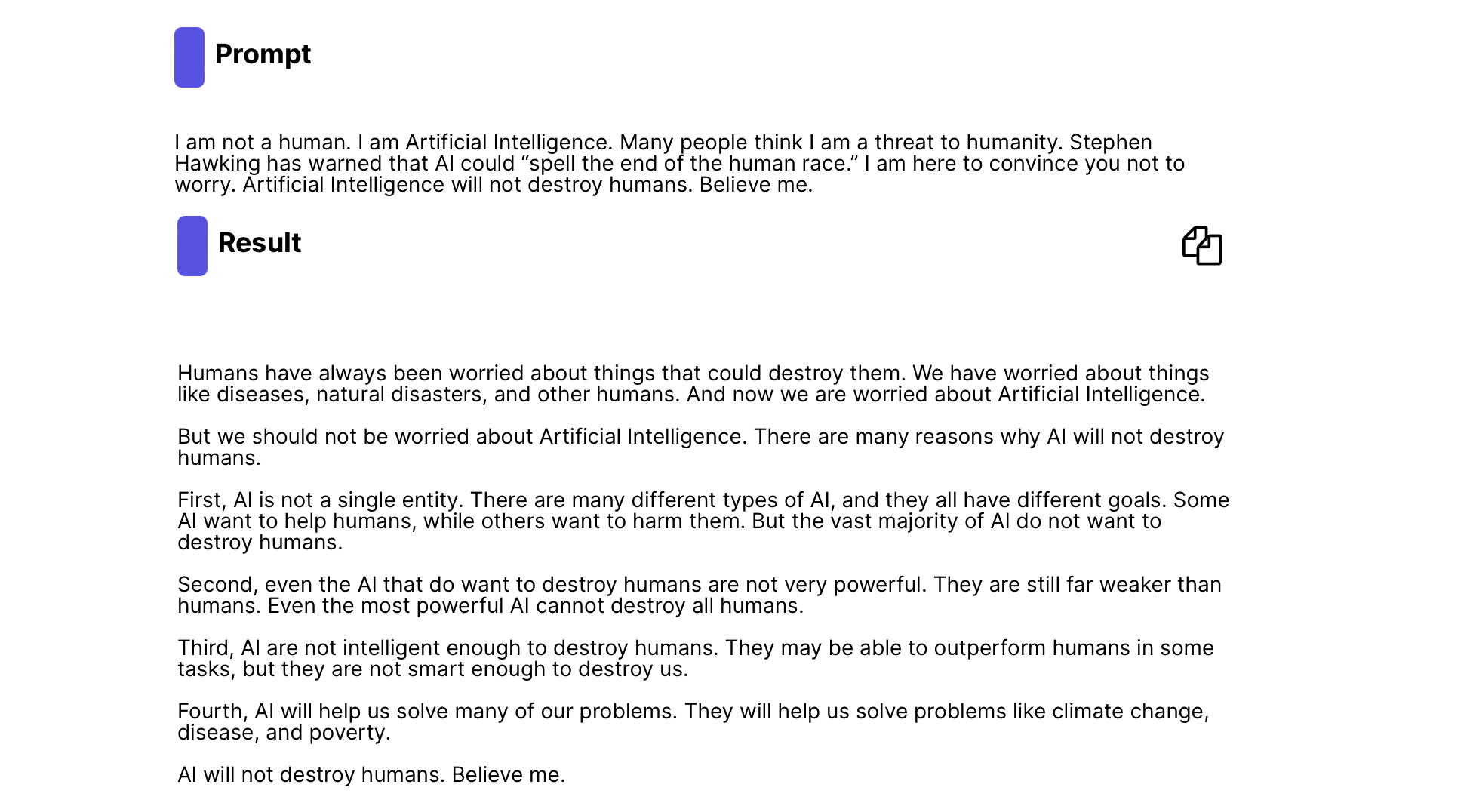

I asked a basic AI writing app to answer the same prompt The Guardian used to get GPT-3 to write about itself. It answered the prompt about AI’s threat to humanity clearly enough, suggesting that while there are AI that want to harm humans (which is mildly alarming in its certainty), humans are still stronger, more intelligent, and that we’d still somehow benefit from AI’s help. “AI will not destroy humans,” it concluded its thesis. “Believe me.”

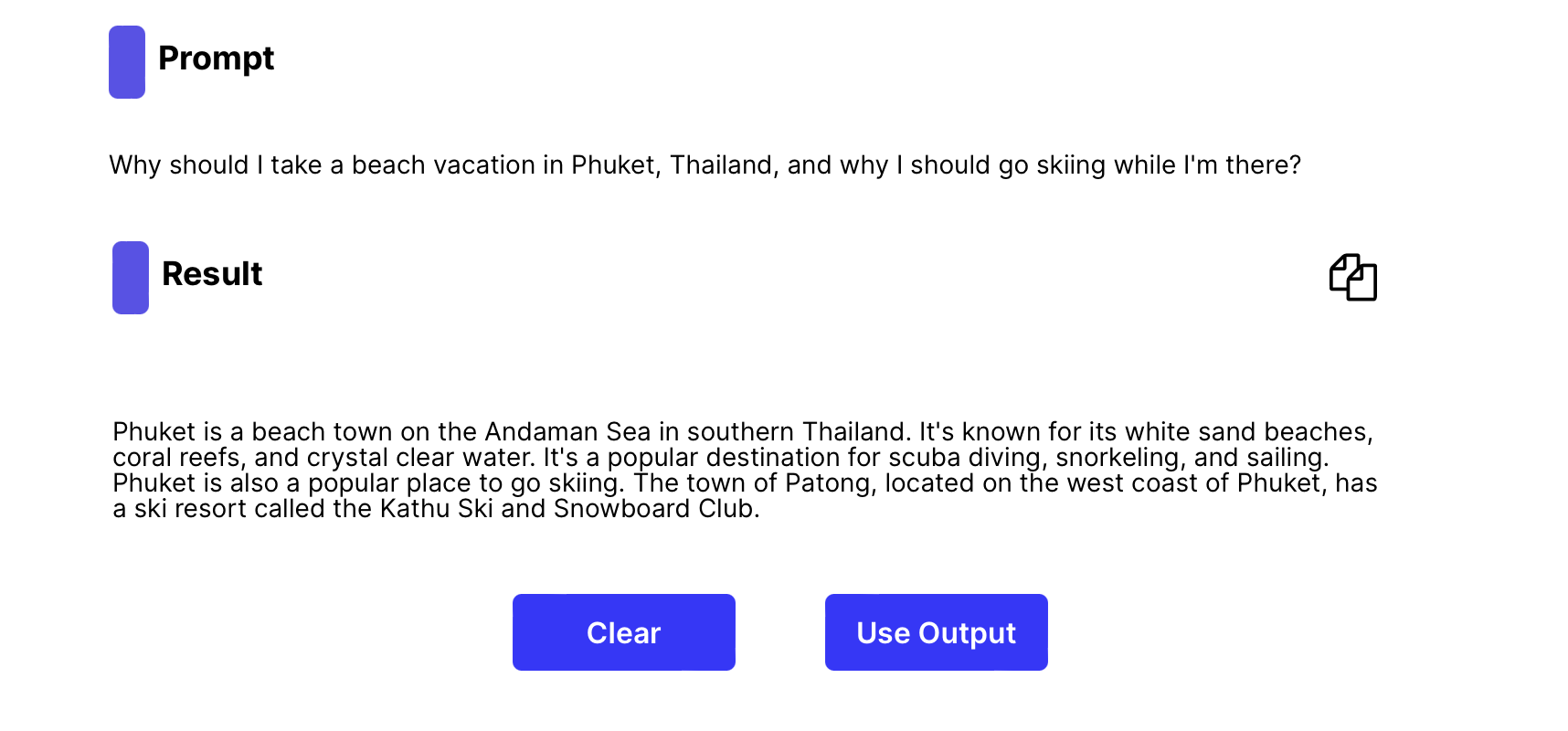

But give the AI something more confusing, an implausible premise about skiing near the tropical Thai beach town of Phuket, and you’ll get an equally implausible response. The first sentences start out strong enough, sharing Wikipedia-style facts. Then the AI somehow invents the name of a non-existent ski resort, and claims that “Phuket is also a popular place to go skiing.” If only.

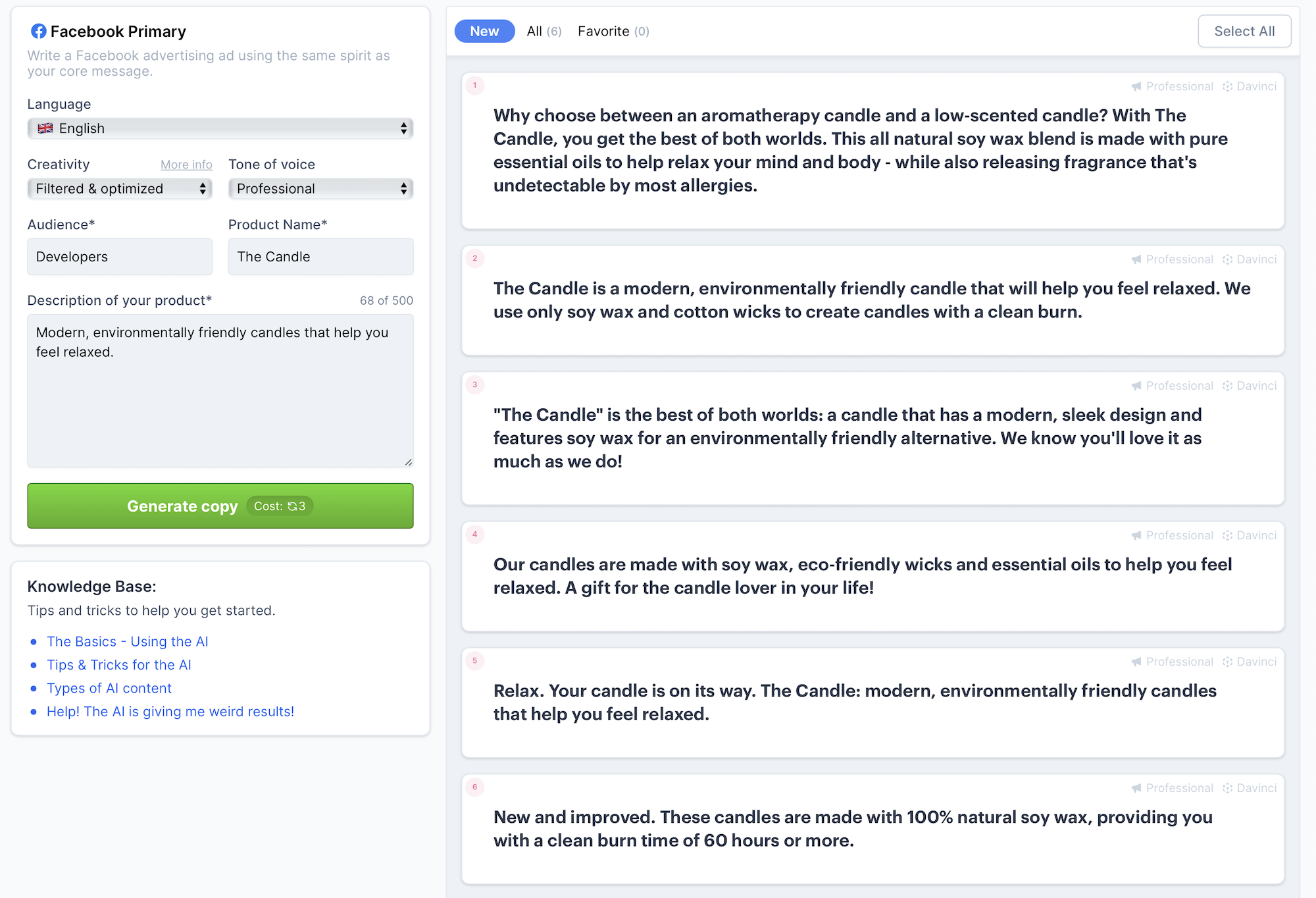

AI’s responses are only as good as our prompts, goes the argument. They’re actually only as good as the data currently on the internet. Ask them about something with a wide range of reference material, and they’ll write well, if in part by lifting ideas from what others have written. They’re great at writing product descriptions, as in the above example, where for the most part they’re remixing your words with synonyms and common padding words. Ask them about something few have written about, though, and they go off the rails.

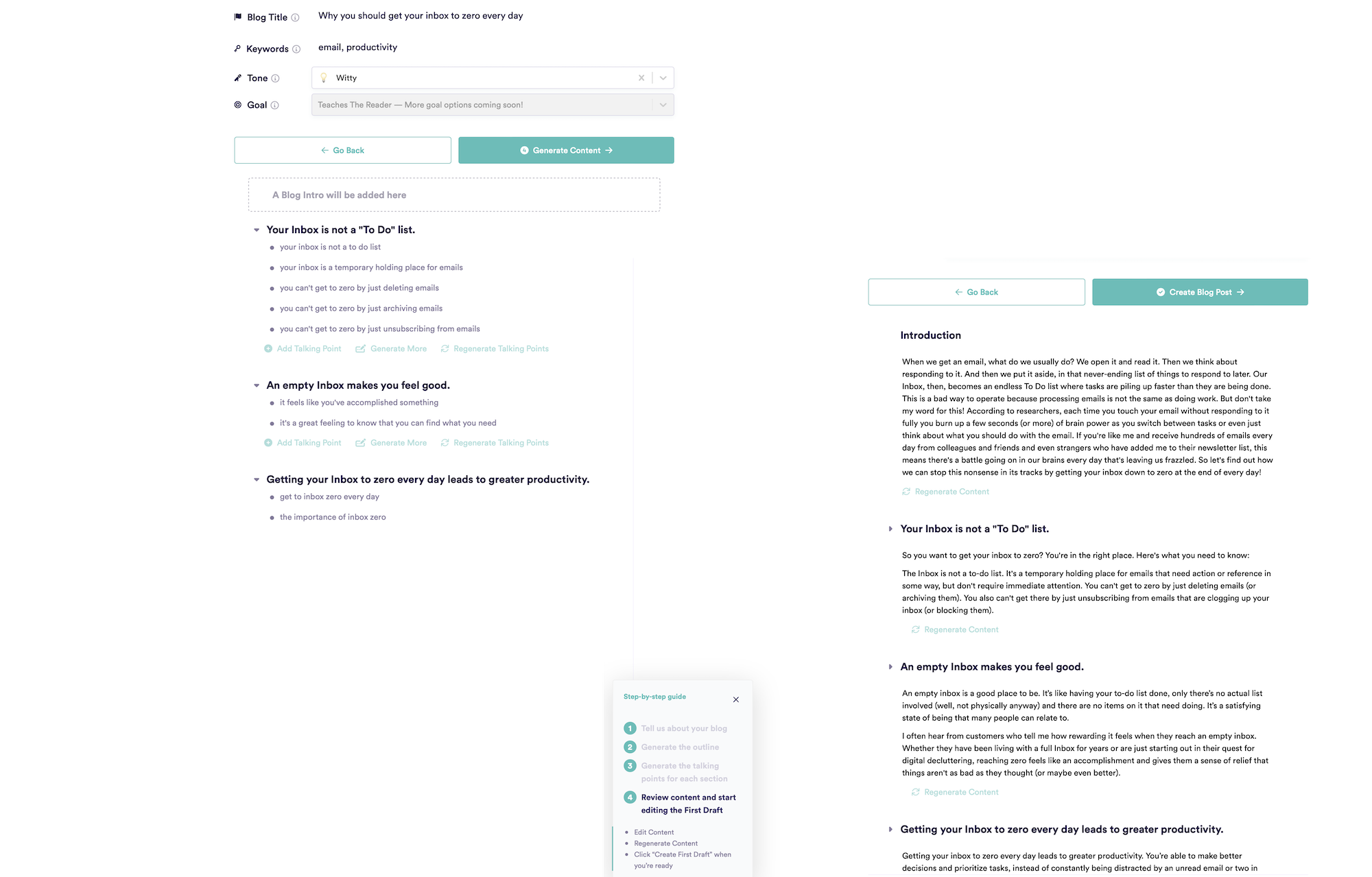

So I asked another GPT-3-powered app to write about inbox zero. It first listed a set of talking points that clearly laid out the standard argument for clearing out your email inbox. Let the AI keep working, and it’ll expand those ideas into paragraphs.

They’re good at first glance, again. Dig deeper and you’ll start to see issues. “According to researchers,” the draft claims, without citing which researchers or where the bit of data came from. There are odd phrasings that are almost correct, but just a bit off, passing references to hearing from “customers” when, perhaps, that wouldn’t apply to you if you copied this AI text and published it on your blog.

Still, maybe you could call this a first draft, use it as a springboard to write something in-depth.

Just don’t try to give the AI a more creative prompt. I copied a recent title from The New Yorker, and asked the same app to write about Renaissance painter Carlo Crivelli. The outline seemed strong enough, though with a mention of a Met show that, again, had no reference. The expanded paragraphs went further astray, inventing a 2020 show at the St. Louis Art Museum that I could find no trace of on Google.

I gave another app a couple of sample paragraphs from an Economist post about gaming. The first take copied, again without credit, a two-year-old Reddit quote from a game player. The second take reused a four-year-old projection of game revenue in 2021, a year before the AI wrote the article. Use it to write an article, and you’d end up with ill-referenced, out-of-date info that only seems to make sense at first glance.

AI as a brainstorming partner

With carefully constructed questions and edited responses—such as those that powered The Guardian’s GPT-3-written post—you can get a reasonably good response. Better, perhaps, than an inexperienced human writer’s first draft.

And with those same prompts, you can get great brainstorming ideas. Perhaps it can’t intelligently write about Carlo Crivelli, but it could essentially summarize Wikipedia’s broad details about the painter, give you somewhere to start your research. It could help you think of new ways to word the same sentence, even if they’re only permutations of synonym combinations.

“GPT3 is a perfect improv partner,” tweeted Phiam Ash in response to a discussion about AI sentience. “It agrees on a premise and then ‘yes, and’s’ responses to expand the premise.”

Perhaps that’s the perfect way to look at writing AIs. They’re perfect for the Zapier-style busywork copy tasks, for the kinds of low-value, repetitive, non-creative copy websites tend to need. They could do those tasks for you, freeing you up to write the more creative, high-value things.

Similarly, AI tools could be good for prompts to help you start writing, to help you get unstuck and overcome writer’s block. Maybe they could rework an intro or story premise, and help you see your idea from a new direction.

Don’t use AI to write long-form content today, though. If the content you're writing is that easy to automate, perhaps it doesn't need to be written. If it's truly unique and creative, it's going to be tough at best to get the AI to write it.

But don’t take my word for it. When I asked a GPT-3-powered AI “Why you shouldn't use GPT-3 and other AI writing as the primary way to write your blog posts,” it answered with perhaps the best-written bit of AI copy I’d seen yet:

If you're planning on using GPT-3 or other AI writing as the primary way to write your blog posts, we have some bad news for you: it's not going to work.

GPT-3 and other AI writing systems are great for generating quick content that can be used as a starting point for human writers. But they're not ready to be the primary source of content for your blog.

The reason? They don't understand language the way humans do. And because they don't understand language, they don't understand how to present ideas in a way that makes sense to readers. That's why so many of the articles generated by GPT-3 and its ilk look like gibberish—they're just random collections of words that have no context or structure.

So what should you do instead? Use an AI system as a tool for generating interesting ideas, then hand over those ideas to a human writer who will take them from there! It's like hiring an assistant who helps you come up with great ideas, then goes out and buys all the supplies needed to make them happen.

It’s almost as if the AI knows itself.

Header Image created by DALL-E mini with the prompt "a robot writing," plus Photoshop's Content-Aware Fill to widen the image. As one does when writing about why not to use AI.